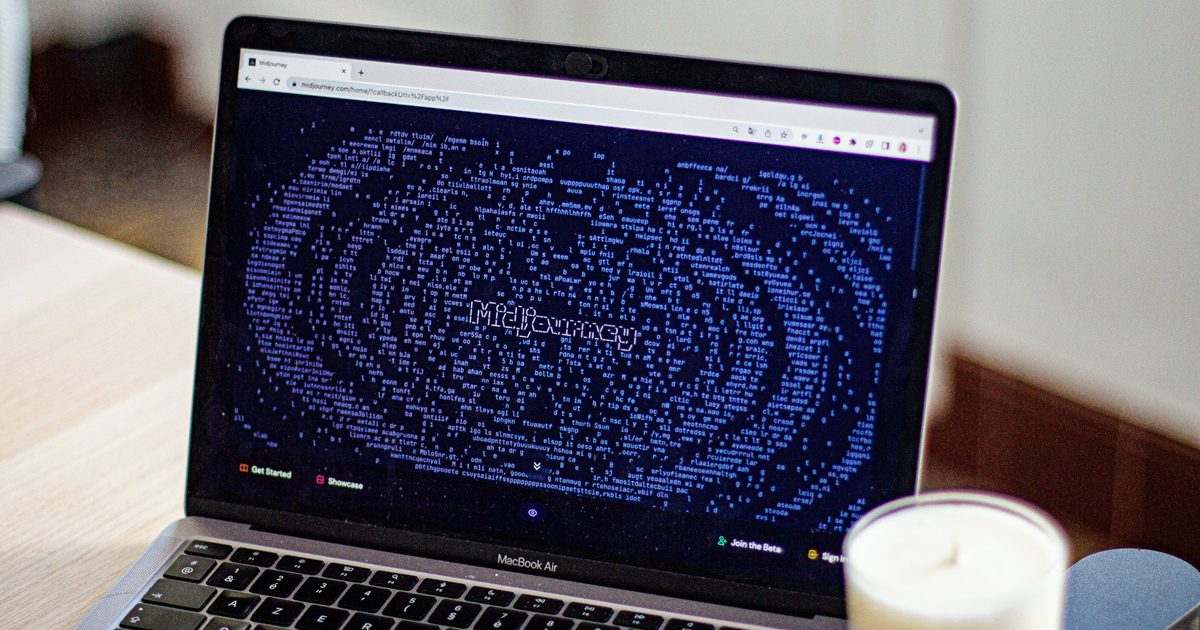

AI systems such as image generators are trained on, among other things, freely accessible data from the internet, which many artists regard as an infringement of their copyright. A tool is now available that lets them not only protect their work but even damage the AI models.

According to heise.de, the tool, called "Nightshade", was presented back in autumn and is now actually available. It was developed at the University of Chicago. According to the editors, the same scientists already offer a similar tool called "Glaze":

"The predecessor aims to allow artists to protect their works, specifically by distorting their images in such a way that AI models are unable to learn or even reproduce their style. To do this, pixels are changed in a way that is invisible to the human eye but makes the machine see something else."

Nightshade is meant to take the whole thing a little further, heise.de continues: "If you use this tool and the crawlers collect your works despite the ban, the training data will be poisoned. If the image shows a wallet instead of a cow and is still labelled as a cow, the model learns to show wallets when someone actually wants to see a cow via a prompt."

This could damage the entire AI model - for all users. In tests with a proprietary version of the Stable Diffusion image generator, for example, 50 poisoned images were enough for dogs to start looking funny; 300 manipulated images later, dogs turned into cats.
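The mislabelling idea described above can be illustrated with a toy sketch. It is only an analogy and not how Nightshade or Stable Diffusion work internally: the "model" here simply averages made-up two-number feature vectors per label, and all names and values (`COW_LIKE`, `WALLET_LIKE`, `what_cow_prompt_yields`, the sample counts) are hypothetical.

```python
# Toy analogy only: a "model" that learns one average feature vector per label.
# This is NOT Nightshade's method; all names and numbers are made up.

COW_LIKE = (0.0, 0.0)        # hypothetical features of a genuine cow image
WALLET_LIKE = (10.0, 10.0)   # hypothetical features of a wallet image
REFERENCES = {"cow": COW_LIKE, "wallet": WALLET_LIKE}


def train(samples):
    """Return the mean feature vector for each label in (label, (x, y)) pairs."""
    sums, counts = {}, {}
    for label, (x, y) in samples:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {
        label: (sx / counts[label], sy / counts[label])
        for label, (sx, sy) in sums.items()
    }


def nearest_concept(point, references):
    """Return the name of the reference concept closest to the given point."""
    def squared_distance(name):
        rx, ry = references[name]
        return (point[0] - rx) ** 2 + (point[1] - ry) ** 2
    return min(references, key=squared_distance)


def what_cow_prompt_yields(n_clean, n_poisoned):
    """Train on clean cows plus wallet images labelled "cow", then ask for a cow."""
    clean = [("cow", COW_LIKE)] * n_clean
    poisoned = [("cow", WALLET_LIKE)] * n_poisoned  # wrong content, "cow" label
    model = train(clean + poisoned)
    return nearest_concept(model["cow"], REFERENCES)
```

In this sketch, a few poisoned samples barely move what the toy model associates with "cow", while a large enough share drags the learned average towards the wallet. The counts in the toy are arbitrary and say nothing about how many images a real model would need.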

You can read more about both tools, how they work and their potential uses here (in German):
